The Power of Data-Driven Decision Making for Shopify Stores

Ever watched a store owner make a change to their Shopify site based purely on gut feeling? “I think customers will like this better,” they say, fingers crossed. Two weeks later, sales are down, and they’re scratching their heads wondering what went wrong. Sound familiar?

When it comes to optimizing your Shopify store, guesswork is like throwing darts blindfolded – you might hit something valuable, but odds are, you won’t. A/B testing cuts through the fog of assumptions, replacing hunches with clear, actionable data about what actually drives conversions on your store.

The Critical Role of A/B Testing in Shopify Store Optimization

Let’s face it: in e-commerce, every percentage point matters. A 1% relative improvement in conversion rate might seem small, but for a store doing $10,000 in monthly revenue, it adds up to an extra $1,200 per year ($100 a month), without spending an additional penny on advertising. Small wins compound. Test after test, improvement after improvement, you end up outperforming competitors who are still relying on gut feelings.

Consider what happened to Peter, a fashion retailer who insisted his product pages needed a complete overhaul based on what “felt right.” After spending $3,000 on design changes, his conversion rate actually dropped by 2%. Compare that to Sarah, who ran systematic A/B tests on her store’s call-to-action buttons, checkout process, and product descriptions. Through iterative testing, she achieved a 24% boost in conversions over six months – each change backed by customer behavior data.

The difference? Sarah understood that A/B testing creates a direct line between store changes and business growth. It connects the dots between:

- Conversion rate improvements and immediate revenue gains

- Customer experience enhancements and reduced friction

- Systematic optimization and long-term competitive advantage

Common Challenges in A/B Test Analysis for Shopify Stores

Of course, running tests is one thing – but correctly analyzing those tests? That’s where many Shopify merchants stumble. I’ve seen store owners make three critical mistakes repeatedly:

First, they misinterpret statistical significance. “Look! We’re at 80% significance after just two days – let’s implement this change immediately!” Not so fast. Ending tests prematurely often leads to false positives. Real statistical confidence requires adequate sample sizes and proper test duration – especially for smaller stores with limited traffic.

Second, external factors get overlooked. I once consulted with a skincare brand that declared their new product page layout a massive success based on a 30% conversion increase during their test. What they missed? Their test coincided with a major influencer mentioning their product, completely skewing the results. Seasonal variations, marketing activities, and industry trends can all contaminate your findings if not properly accounted for.

Finally, there’s the translation gap – moving from insight to implementation. Data without action is just noise. Too often, merchants get stuck in analysis paralysis or implement partial solutions that fail to capture the full benefit of their test findings. A systematic approach to both analysis and implementation is essential for meaningful results.

In this article, I’ll walk you through how to analyze your A/B tests methodically, avoid common pitfalls, and transform test insights into revenue-generating improvements. By the end, you’ll have a framework for making confident, data-backed decisions that actually move the needle for your Shopify store.

Understanding A/B Test Fundamentals for Accurate Analysis

Before diving into complex analysis techniques, let’s make sure you’re measuring what actually matters. The foundation of effective A/B testing isn’t fancy tools or advanced statistics – it’s knowing precisely which metrics align with your business goals.

Key A/B Testing Metrics for Shopify Stores

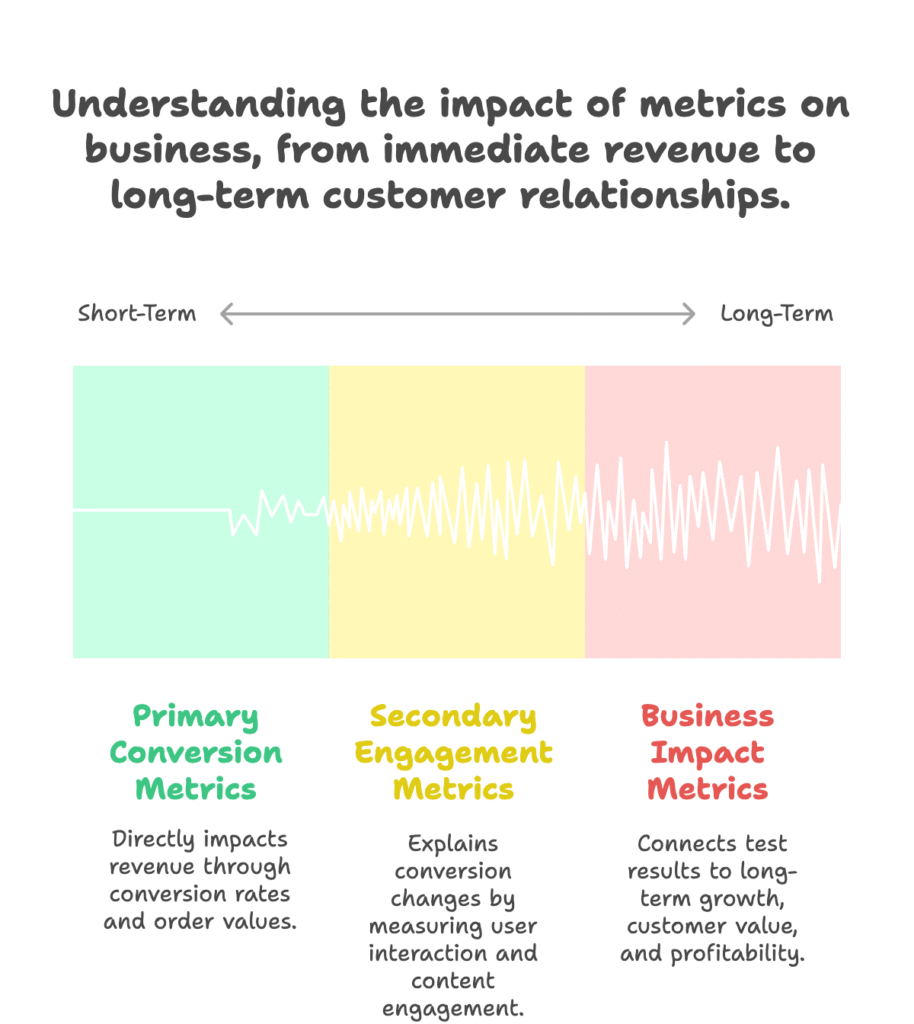

When analyzing test results, not all metrics deserve equal attention.

I organize Shopify metrics into three tiers of importance:

Primary conversion metrics directly impact your bottom line. These are the numbers that translate to dollars and cents:

- Overall conversion rate: The percentage of visitors who complete a purchase

- Add-to-cart rate: How often visitors add products to their cart

- Checkout completion rate: The percentage of cart-starters who actually finish checking out

- Average order value (AOV): How much customers spend per transaction

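A product page test might boost your add-to-cart rate by 15%, but if it simultaneously reduces your checkout completion rate by 10%, how much are you really winning? Because the two rates multiply, purchases rise only about 3.5% (1.15 × 0.90 ≈ 1.035), far less than the headline number suggests, and a slightly steeper checkout drop would wipe out the gain entirely. Always consider the entire conversion funnel.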
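A minimal sketch of that funnel arithmetic, assuming overall conversion is simply the product of the stage rates (real funnels have more steps, and the function name is hypothetical):

```python
from math import prod


def net_funnel_lift(stage_lifts: list[float]) -> float:
    """Combine relative lifts at each funnel stage into a net lift in purchases.

    Assumes overall conversion is the product of the stage rates, so
    relative changes multiply rather than add.
    """
    return prod(1 + lift for lift in stage_lifts) - 1


# Add-to-cart rate +15%, checkout completion rate -10%
print(f"{net_funnel_lift([0.15, -0.10]):+.1%}")  # prints +3.5%
```

Adding the two percentages (+15% and -10% = +5%) would overstate the win; multiplying them is what the funnel actually does.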

Secondary engagement metrics help explain the why behind conversion changes:

- Time on page: How long visitors engage with your content

- Bounce rate: The percentage of visitors who leave without taking action

- Click-through rate: How often visitors click specific elements

- Scroll depth: How far down the page visitors typically read

I worked with a supplement store that saw higher conversion rates with their long-form product pages, but couldn’t understand why. Scroll depth analysis revealed the answer: customers who reached the scientific study section near the bottom converted at 3x the rate of those who didn’t. This insight led to reorganizing content to bring studies higher on the page, boosting overall conversions further.

Finally, business impact metrics connect test results to long-term growth:

- Revenue per visitor: Average revenue generated per site visitor

- Return on investment: Testing costs versus revenue gains

- Customer acquisition cost: How test changes affect your cost of acquiring customers

- Customer lifetime value: Long-term customer value changes from improvements

These metrics matter because a test might increase immediate conversions while hurting long-term customer value. I’ve seen “Buy Now” button tests that increased conversion rates but attracted one-time buyers rather than repeat customers, ultimately reducing overall profitability.

Statistical Concepts Essential for Shopify A/B Test Analysis

You don’t need a statistics degree to run effective A/B tests, but understanding a few key concepts will save you from common analysis mistakes.

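Statistical significance answers a crucial question: “Is this result due to an actual performance difference, or could it just be random chance?” When your testing tool declares 95% significance, it’s saying that if the two versions actually performed the same, a difference at least this large would show up by chance less than 5% of the time. (A common shorthand is “only a 5% chance the result is luck,” but strictly speaking that is not what the number measures.)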

For Shopify stores, I recommend targeting at least 95% significance before implementing changes – though this threshold can be adjusted based on how important or risky the change is. A minor text change might only need 90% confidence, while a complete checkout redesign should clear 98%.
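A two-proportion z-test is one common way testing tools arrive at these figures, though individual tools may use other methods (Bayesian models, for example). This minimal sketch uses only Python’s standard library; the order and visitor counts are hypothetical.

```python
import math
from statistics import NormalDist


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value for the difference between two conversion rates."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    # Pooled rate under the null hypothesis that both versions convert equally
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical results: control 200 orders from 10,000 visitors, variant 245 from 10,000
p = two_proportion_p_value(200, 10_000, 245, 10_000)
for confidence in (0.90, 0.95, 0.98):
    verdict = "clears" if p < 1 - confidence else "does not clear"
    print(f"p = {p:.3f}: {verdict} the {confidence:.0%} threshold")
```

With these numbers (p ≈ 0.031) the variant clears the 90% and 95% bars but not 98%: enough for a minor text change under the guideline above, but not for a checkout redesign.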

Two types of statistical errors often plague test analysis:

- Type I errors (false positives): Believing a variant is better when it’s not

- Type II errors (false negatives): Missing actual improvements because the test didn’t detect them

Both can cost you money. Implementing a false positive means rolling out changes that hurt conversion rates. Missing a true positive means leaving money on the table by not implementing beneficial changes.

Sample size requirements directly impact how long your tests need to run. A common mistake is ending tests too early, especially when early results look promising. For reliable results, you need enough data.

Here’s a quick rule of thumb for minimum sample sizes based on your current conversion rate and the relative improvement you’re trying to detect, assuming the common defaults of 95% significance and 80% power:

- 2% baseline conversion rate, detecting a 20% lift: about 25,000 visitors per variant

- 5% baseline conversion rate, detecting a 20% lift: about 10,000 visitors per variant

- 10% baseline conversion rate, detecting a 20% lift: about 5,000 visitors per variant
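These figures come from the standard normal-approximation formula for comparing two proportions. A minimal sketch, with a hypothetical function name (real calculators may use slightly different formulas and give somewhat different answers):

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed per variant for a two-sided two-proportion test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)


for baseline in (0.02, 0.05, 0.10):
    n = sample_size_per_variant(baseline, 0.20)
    print(f"{baseline:.0%} baseline, 20% lift: {n:,} visitors per variant")
```

For the three cases above the formula gives roughly 21,100, 8,200, and 3,800 visitors per variant; the rule-of-thumb figures sit somewhat higher, which builds in a safety margin.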

For most Shopify stores, this translates to running tests for at least two full weeks, capturing all days of the week and accounting for traffic fluctuations. Smaller stores with limited traffic might need a month or more for conclusive results.

Statistical power – the probability that your test will detect an effect if one exists – is equally important. Low-powered tests frequently miss real improvements. For most Shopify tests, aim for at least 80% power, which balances the ability to detect effects against practical test duration constraints.
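To see how power depends on traffic, here is a minimal sketch using the same normal approximation; the function name and traffic levels are hypothetical.

```python
import math
from statistics import NormalDist


def achieved_power(baseline_rate: float, relative_lift: float,
                   visitors_per_variant: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided two-proportion test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    se = math.sqrt((p1 * (1 - p1) + p2 * (1 - p2)) / visitors_per_variant)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    # Ignores the tiny chance of a significant result in the wrong direction
    return NormalDist().cdf(abs(p2 - p1) / se - z_alpha)


for visitors in (5_000, 10_000, 21_000):
    power = achieved_power(0.02, 0.20, visitors)
    print(f"{visitors:,} visitors per variant: {power:.0%} power to detect a 20% lift")
```

With a 2% baseline, 5,000 visitors per variant would catch a real 20% lift well under a third of the time, which is exactly the Type II error risk described earlier.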

One often-overlooked consideration is adjusting for multiple comparisons. When you test several variants at once or look at several metrics, the chance of finding a “significant” result by pure luck grows quickly: with five independent comparisons at 95% significance, the odds of at least one false positive are about 23%. Corrections such as Bonferroni, which divides your significance threshold by the number of comparisons, help keep that risk in check.
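A short sketch of both calculations, assuming the comparisons are independent (correlated metrics inflate the risk less, which is also why Bonferroni is considered conservative). The function names are hypothetical.

```python
def family_wise_error_rate(alpha: float, comparisons: int) -> float:
    """Chance of at least one false positive across independent comparisons."""
    return 1 - (1 - alpha) ** comparisons


def bonferroni_threshold(alpha: float, comparisons: int) -> float:
    """Per-comparison p-value threshold under the Bonferroni correction."""
    return alpha / comparisons


for k in (1, 3, 5, 10):
    print(f"{k:>2} comparisons: {family_wise_error_rate(0.05, k):.0%} chance of a false positive, "
          f"Bonferroni threshold p < {bonferroni_threshold(0.05, k):.3f}")
```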

Setting Up for Successful A/B Test Analysis

Analysis quality is determined long before your test ends. The way you structure your tests directly impacts the value of your results. Let’s explore how to set up tests that yield genuinely useful insights.

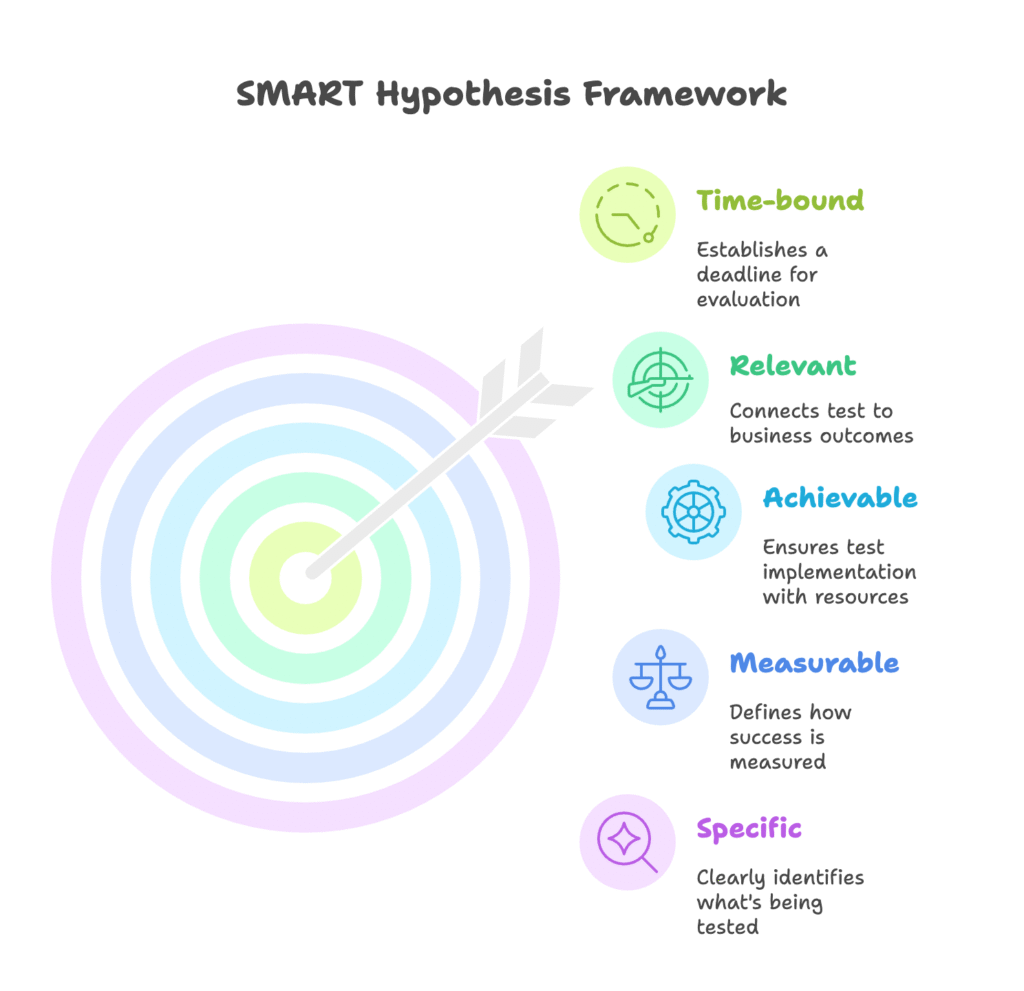

Creating SMART Hypotheses for Meaningful Results

The difference between testing randomly and testing strategically starts with your hypothesis. A vague idea like “let’s test a new button color” yields vague insights.

Instead, formulate SMART hypotheses that connect directly to business goals:

- Specific: Clearly identify what’s being tested and why

- Measurable: Define exactly how success will be measured

- Achievable: Ensure the test can be implemented with available resources

- Relevant: Connect the test to meaningful business outcomes

- Time-bound: Establish when results will be evaluated

Compare these two hypotheses:

Weak: “Changing the button color will improve conversions.”

Strong: “Changing our ‘Add to Cart’ button from blue to orange will increase our add-to-cart rate by at least 10% among mobile users, based on heatmap data showing low visibility of the current button, measured over a two-week period.”

The second hypothesis is testable, specific about what success looks like, and rooted in existing data. It’s also focused on a single variable, which makes the results straightforward to interpret.

Great hypotheses come from data, not hunches. Mine these sources for hypothesis inspiration:

- Analytics insights: Where are visitors dropping off in your funnel?

- Customer feedback: What frustrations do customers mention repeatedly?

- Heatmaps and session recordings: Where do visitors click, scroll, or get stuck?

A client’s customer support team kept hearing “I couldn’t find where to enter my discount code.” Session recordings confirmed customers scrolling up and down the checkout page searching for the coupon field. This led to a simple hypothesis: “Making the coupon field more prominent in checkout will increase conversion rate by reducing abandonment.” The test yielded a 7% conversion lift – all from listening to customers and watching their behavior.

Finally, prioritize your hypotheses based on potential impact, implementation difficulty, and resource requirements. The ICE framework works well for this:

- Impact: How significant could the improvement be?

- Confidence: How certain are you that this change will work?

- Ease: How simple is it to implement and test?

Score each factor from 1-10, then average them to prioritize your testing roadmap. This prevents wasting resources on low-impact tests when higher-potential opportunities exist.
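A minimal sketch of ICE scoring as described above, averaging the three 1 to 10 scores. The class name, backlog items, and scores are hypothetical. Some teams multiply the factors instead of averaging; averaging follows the approach described here.

```python
from dataclasses import dataclass


@dataclass
class BacklogItem:
    name: str
    impact: int      # 1-10
    confidence: int  # 1-10
    ease: int        # 1-10

    @property
    def ice_score(self) -> float:
        """Average of the three ICE factors."""
        return (self.impact + self.confidence + self.ease) / 3


def prioritize(items: list[BacklogItem]) -> list[BacklogItem]:
    """Return items ordered from highest to lowest ICE score."""
    return sorted(items, key=lambda item: item.ice_score, reverse=True)


backlog = [
    BacklogItem("Orange Add to Cart button on mobile", impact=6, confidence=7, ease=9),
    BacklogItem("More prominent coupon field in checkout", impact=8, confidence=8, ease=7),
    BacklogItem("Full product page redesign", impact=9, confidence=4, ease=2),
]
for item in prioritize(backlog):
    print(f"{item.ice_score:.1f}  {item.name}")
```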

Test Design Considerations That Affect Analysis

The way you structure your test directly impacts the quality of your analysis. These design elements deserve careful attention:

Proper control and variant setup is fundamental. In classic A/B testing, you change just one element at a time. This keeps the results clean and easy to interpret: any difference in performance can be attributed to that single change.

For example, don’t simultaneously change a product description’s content, font, and placement. If you see an improvement, you won’t know which element made the difference. Instead, isolate variables for clear causation.

When you do need to test multiple elements, consider using multivariate testing, which systematically evaluates different combinations. However, these tests require significantly more traffic and longer durations to reach statistical significance.

For traffic allocation, a 50/50 split between control and variant is typically optimal for simple A/B tests. If testing multiple variants, ensure each gets enough traffic for statistical validity – even if that means running the test longer.
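Testing apps handle allocation for you, but it helps to know what a sound split looks like under the hood. One common approach, sketched here as an assumption rather than how any particular Shopify app works, is to hash a stable visitor identifier so each visitor lands in the same variant on every visit. The function name, experiment name, visitor IDs, and weights are hypothetical.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str, weights: dict[str, float]) -> str:
    """Deterministically map a visitor to a variant according to traffic weights.

    Hashing the experiment name together with the visitor ID means the same
    visitor always sees the same variant, while different experiments split
    traffic independently of one another.
    """
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode()).digest()
    # First 8 bytes as an integer, scaled to a number in [0, 1]
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    cumulative = 0.0
    for variant, weight in weights.items():
        cumulative += weight
        if bucket < cumulative:
            return variant
    # Floating-point rounding can leave bucket at or just above the final total
    return list(weights)[-1]


split = {"control": 0.5, "variant": 0.5}
print(assign_variant("visitor-123", "pdp-button-color", split))
```

For three or more variants, pass more entries in `weights`; each still needs enough visitors on its own, which is why multi-variant tests run longer.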

Test duration planning prevents premature conclusion or unnecessarily extended tests. I recommend these guidelines:

- Run tests for at least two full weeks to capture all days of the week

- Include full business cycles (often weekly patterns for e-commerce)

- Avoid major holidays and promotional periods that skew normal behavior

- Pre-calculate required sample sizes to determine minimum duration

A fashion retailer once ended a test after just three days because early results showed a “clear winner.” When they implemented the change, performance actually dropped. Why? Their test ran Tuesday through Thursday, missing the completely different shopping behaviors that occur on weekends. Always capture full weekly cycles in your tests.

Segmentation strategies let you uncover insights that overall results might miss. Different visitor segments often respond differently to the same changes. Consider pre-planning analysis for these key segments:

- Traffic source: Do social media visitors respond differently than search visitors?

- New vs. returning: Do first-time visitors prefer different experiences than repeat customers?

- Device type: Do desktop and mobile users show different preferences?

A home goods store found that their product page redesign performed 15% better overall – but when segmented, they discovered it performed 30% better for mobile users while actually hurting desktop conversions by 5%. Instead of implementing for all users, they created device-specific experiences, maximizing the benefit of their test.

Analyzing A/B Test Results in the Shopify Context

You’ve run your test properly – now comes the crucial part: extracting accurate insights that drive real business improvements. Let’s explore how to interpret results correctly and apply advanced analysis techniques specifically relevant to Shopify stores.

Interpreting Statistical Results Correctly

Most testing tools provide dashboards showing performance differences between variants. These reports typically highlight conversion rates, improvement percentages, and statistical significance. But knowing how to read these reports correctly is essential.

First, focus on confidence intervals, not just point estimates. If your variant shows a 15% improvement with a confidence interval of ±20 percentage points, the actual effect could be anything from a 5% decrease to a 35% increase. Wide confidence intervals indicate uncertainty, often due to insufficient sample sizes.
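A minimal sketch of one common interval, the Wald interval for a difference in conversion rates. The counts are hypothetical, and converting to relative lift by dividing by the control rate treats that rate as known, which is an approximation.

```python
import math
from statistics import NormalDist


def diff_confidence_interval(conv_a: int, n_a: int, conv_b: int, n_b: int,
                             confidence: float = 0.95) -> tuple[float, float]:
    """Wald confidence interval for the difference in conversion rates (B minus A)."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    se = math.sqrt(rate_a * (1 - rate_a) / n_a + rate_b * (1 - rate_b) / n_b)
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    diff = rate_b - rate_a
    return diff - z * se, diff + z * se


# Hypothetical results: control 200/10,000 (2.0%), variant 245/10,000 (2.45%)
low, high = diff_confidence_interval(200, 10_000, 245, 10_000)
baseline = 200 / 10_000
print(f"Absolute difference: {low:+.2%} to {high:+.2%}")
print(f"Approximate relative lift: {low / baseline:+.0%} to {high / baseline:+.0%}")
```

Here the observed lift is 22.5%, but the interval runs from roughly +2% to +43%: the variant is probably better, yet the size of the gain is still very uncertain.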

Second, look for consistency across metrics. If your primary metric (say, checkout completion) shows improvement, but related metrics (like cart abandonment rate) don’t reflect corresponding changes, investigate this discrepancy before implementing changes.

Watch out for these common statistical fallacies:

- Regression to the mean: Extreme early results tend to normalize over time

- Simpson’s paradox: Overall trends can reverse when data is broken into segments

- Peeking bias: Checking results repeatedly increases false positive risk
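Peeking bias is easy to underestimate. This sketch simulates A/A tests, in which both versions share the same hypothetical 10% conversion rate, so every “significant” result is a false positive. It compares an analyst who runs a z-test after each of ten batches of traffic and would stop at the first p < 0.05 with one who checks only once at the planned end. All parameters and names are illustrative assumptions.

```python
import math
import random
from statistics import NormalDist


def p_value(conv_a: int, conv_b: int, n: int) -> float:
    """Two-sided two-proportion z-test p-value with n visitors per version."""
    pooled = (conv_a + conv_b) / (2 * n)
    se = math.sqrt(pooled * (1 - pooled) * 2 / n)
    if se == 0:
        return 1.0
    z = (conv_b - conv_a) / n / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate_aa_tests(num_tests=1_000, looks=10, visitors_per_look=200,
                      rate=0.10, alpha=0.05, seed=7):
    """Return (false positive rate with peeking, false positive rate with one final look)."""
    rng = random.Random(seed)
    peeking_false_positives = 0
    fixed_false_positives = 0
    for _ in range(num_tests):
        conv_a = conv_b = n = 0
        flagged_at_any_look = False
        for _ in range(looks):
            conv_a += sum(rng.random() < rate for _ in range(visitors_per_look))
            conv_b += sum(rng.random() < rate for _ in range(visitors_per_look))
            n += visitors_per_look
            p = p_value(conv_a, conv_b, n)
            if p < alpha:
                flagged_at_any_look = True  # a "peeker" would declare a winner here
        peeking_false_positives += flagged_at_any_look
        fixed_false_positives += p < alpha  # p from the final, planned look only
    return peeking_false_positives / num_tests, fixed_false_positives / num_tests


peeking, fixed = simulate_aa_tests()
print(f"False positive rate checking 10 times: {peeking:.0%}")
print(f"False positive rate checking once at the end: {fixed:.0%}")
```

The single-look analyst stays near the nominal 5%, while the analyst who peeks flags a false winner far more often, even though nothing about the two versions differs.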

A supplement company ran a pricing test that initially showed a 25% revenue increase for their higher-priced variant. The CEO wanted to implement immediately, but their analyst insisted on letting the test complete its planned duration. Good thing – by test end, the effect had disappeared completely. This “regression to the mean” happens frequently with early results that look too good to be true.

Not all tests yield clear winners. When facing inconclusive results:

- Consider extending the test if you’re close to significance but need more data

- Abandon tests that show minimal difference after adequate sample sizes

- Extract learning even from neutral outcomes – sometimes discovering what doesn’t matter is valuable

Remember that inconclusive doesn’t always mean failure. A skincare brand tested extensive product descriptions against shorter ones and found virtually identical performance. This “non-result” actually saved them significant content creation costs going forward, as they learned brevity worked just as well for their audience.

Advanced Analysis Techniques for Shopify Stores

Beyond basic conversion metrics, these advanced analysis approaches can uncover deeper insights from your test data:

Segmentation analysis reveals how different visitor groups respond to your test variants. The same change might yield dramatically different results across segments. Essential segmentations for Shopify stores include:

- Traffic sources: Paid social visitors often behave differently than organic search traffic

- Device types: Mobile conversion patterns frequently differ from desktop

- Geographic regions: International customers may respond differently than domestic ones

A footwear brand discovered their simplified checkout process increased conversions by 5% overall – but when segmented, showed a remarkable 22% improvement for mobile users while actually decreasing desktop conversions by 3%. This insight led them to implement device-specific checkout flows, maximizing overall conversion lift.
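A per-segment breakdown like this one is straightforward to compute from order and visitor counts. The counts below are hypothetical, chosen only to show the pattern of a mobile gain hiding a desktop loss; the function names are also hypothetical.

```python
Counts = tuple[int, int]  # (orders, visitors)


def relative_lift(control: Counts, variant: Counts) -> float:
    """Relative change in conversion rate, variant vs. control."""
    control_rate = control[0] / control[1]
    variant_rate = variant[0] / variant[1]
    return variant_rate / control_rate - 1


def lifts_by_segment(results: dict[str, tuple[Counts, Counts]]) -> dict[str, float]:
    """Per-segment lifts plus the pooled overall lift."""
    lifts = {seg: relative_lift(c, v) for seg, (c, v) in results.items()}
    total_control = tuple(map(sum, zip(*(c for c, _ in results.values()))))
    total_variant = tuple(map(sum, zip(*(v for _, v in results.values()))))
    lifts["overall"] = relative_lift(total_control, total_variant)
    return lifts


results = {
    "mobile": ((300, 10_000), (366, 10_000)),
    "desktop": ((400, 8_000), (388, 8_000)),
}
for segment, lift in lifts_by_segment(results).items():
    print(f"{segment:>8}: {lift:+.1%}")
```

Segments are smaller than the whole test, so check that each one has enough traffic on its own before acting on a segment-level result.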

Cohort analysis groups customers by shared history, such as when and how they were acquired or how they have purchased since, and examines how each group responds. Particularly valuable cohorts include:

- Purchase history: First-time buyers vs. repeat customers

- Customer lifetime value: High-value vs. average customers

- Loyalty program status: Program members vs. non-members

A beauty retailer’s product page test showed minimal overall impact, but cohort analysis revealed that first-time visitors converted 18% better while returning customers actually converted 10% worse. The insight? New customers needed more information and reassurance, while returning customers found the additional content distracting. The solution was personalizing page content based on customer status – a sophistication that would have been missed without cohort analysis.

Multi-goal analysis evaluates performance across various conversion points, not just the primary goal. Consider analyzing:

- Primary and secondary conversions: Purchases, email signups, account creations

- Micro-conversions: Product views, add-to-carts, wishlist additions

- Customer journey touchpoints: How changes affect the entire path to purchase

A home decor store tested a new product recommendation algorithm that didn’t significantly impact their primary purchase conversion rate. However, multi-goal analysis revealed it increased average order value by 12% and product views per session by 34%. These secondary benefits justified implementing the change despite the neutral primary metric result.

The most valuable insights often hide in segmented data rather than overall results. Always dig deeper than surface-level metrics to extract the full value from your testing program.

Translating A/B Test Results into Actionable Shopify Improvements

Identifying winning tests is just half the battle. Implementing those wins effectively – and learning from losses – is where the real value of A/B testing materializes. Let’s explore how to turn test insights into tangible store improvements.

Implementation Strategies for Winning Variants

When your test yields a clear winner, you’ll face a critical decision: how quickly and broadly should you roll out the change? Consider these implementation approaches:

Full implementation makes sense when:

- The improvement is substantial (10%+ lift)

- Statistical confidence is very high (97%+)

- The change is low-risk (content, visual elements, etc.)

- No significant negative impact appears in any important segment

Phased implementation is safer when:

- The change affects critical pathways like checkout

- Different segments show varying results

- The change is complex or resource-intensive

- You want additional validation before full commitment

A jewelry store tested a streamlined checkout that showed a 12% conversion improvement. Rather than immediately rolling it out to all traffic, they implemented it for 25% of visitors initially, then 50%, then 75%, monitoring performance at each stage. This cautious approach caught an unexpected compatibility issue with certain payment processors that wasn’t evident during testing, allowing them to fix it before affecting all customers.
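A staged rollout like this is often handled by a feature-flag service or testing app, and Shopify’s checkout customization options constrain where such logic can run. As a hedged sketch of the idea only, the gate below gives each visitor a fixed bucket, so raising the percentage from 25 to 50 to 75 only adds visitors and never flips someone back. The function and feature names are hypothetical.

```python
import hashlib


def in_rollout(visitor_id: str, feature: str, rollout_percent: float) -> bool:
    """Return True if this visitor should see the new experience.

    Each visitor gets a fixed bucket between 0.00 and 99.99, so raising the
    rollout percentage only adds visitors; nobody who already has the change loses it.
    """
    digest = hashlib.sha256(f"{feature}:{visitor_id}".encode()).digest()
    bucket = int.from_bytes(digest[:4], "big") % 10_000 / 100
    return bucket < rollout_percent


visitors = [f"visitor-{i}" for i in range(10_000)]
for stage in (25, 50, 75, 100):
    share = sum(in_rollout(v, "streamlined-checkout", stage) for v in visitors) / len(visitors)
    print(f"{stage}% stage: {share:.1%} of visitors see the new checkout")
```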

For Shopify stores specifically, implementation often involves theme file modifications. Keep these technical considerations in mind:

- Make changes in a duplicate theme first to avoid disrupting the live store

- Document all modifications with clear comments in code

- Test thoroughly across devices and browsers before going live

- Consider using apps that allow section-specific modifications

After implementation, establish a monitoring procedure to verify that the change performs as expected in the wild. Set up dashboards tracking key metrics, and schedule check-ins at 24 hours, one week, and one month post-implementation.

Finally, document your wins properly. Create a repository of test results that captures:

- The original hypothesis and rationale

- Test design and duration

- Key results with statistical confidence

- Implementation details

- Long-term performance impact
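One lightweight way to keep that repository consistent is a structured record per test. This sketch uses a Python dataclass whose fields follow the list above; the class name and fields are hypothetical, the example loosely follows the coupon-field test described earlier, and its dates and confidence level are placeholders rather than reported results.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class ExperimentRecord:
    """One entry in a test-results repository."""
    hypothesis: str
    rationale: str
    design: str
    start: date
    end: date
    primary_metric: str
    observed_lift: float           # relative lift, e.g. 0.07 for +7%
    confidence: float              # e.g. 0.95
    implementation_notes: str = ""
    long_term_impact: list[str] = field(default_factory=list)  # post-launch check-ins

    @property
    def duration_days(self) -> int:
        return (self.end - self.start).days


record = ExperimentRecord(
    hypothesis="Making the coupon field more prominent in checkout will increase conversion rate",
    rationale="Support requests and session recordings showed shoppers searching for the field",
    design="A/B, 50/50 split, all checkout visitors",
    start=date(2024, 3, 4),   # placeholder
    end=date(2024, 3, 18),    # placeholder
    primary_metric="conversion rate",
    observed_lift=0.07,
    confidence=0.95,          # placeholder
)
print(record.duration_days)
```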

This documentation becomes invaluable as your testing program matures, creating an institutional knowledge base that informs future tests and prevents repeated mistakes.

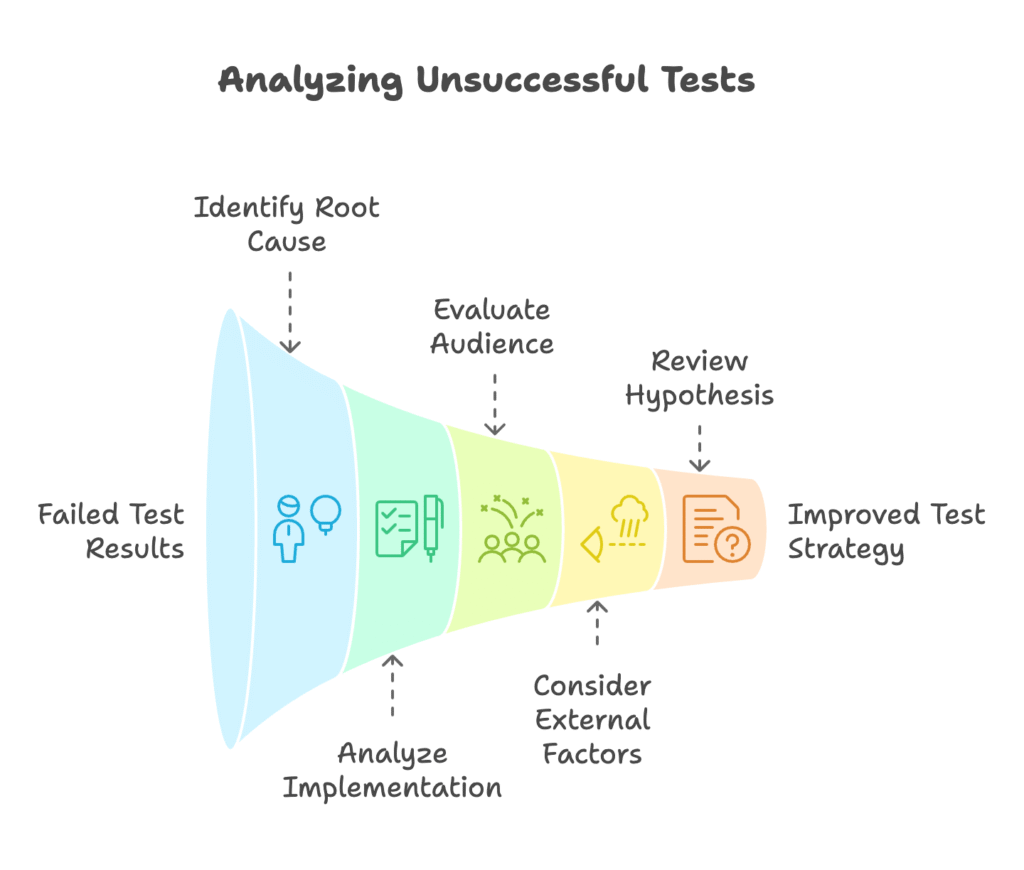

Learning from Unsuccessful Tests

“Failed” tests often contain the most valuable insights – if you know how to extract them.

When a test doesn’t yield the expected improvement, follow this analysis process:

Root cause analysis helps determine why your test underperformed:

- Implementation issues: Was the test executed correctly?

- Audience mismatch: Did you test with the wrong segment?

- External factors: Did unusual events contaminate results?

- Hypothesis flaws: Was your foundational assumption incorrect?

A fashion retailer tested prominent trust badges on product pages, expecting improved conversions. The test showed no impact. Digging deeper through session recordings, they discovered visitors rarely scrolled down far enough to see the badges. The hypothesis was sound, but the implementation (badge placement) was flawed. Moving the badges higher on the page in a follow-up test yielded the expected conversion lift.

Iterative testing approaches use “failed” tests as stepping stones:

- Refine hypotheses based on what you learned

- Consider A/A testing to verify your testing setup

- Test incremental changes rather than drastic overhauls

After a complete product page redesign test showed negative results, a health supplement company took a more methodical approach. Instead of another complete redesign, they tested individual elements from the failed variant – images, layout, and copy – separately. They discovered that while customers disliked the new layout, they responded positively to the new product images. This incremental approach salvaged value from the initial “failure.”

Even unsuccessful tests reveal valuable customer insights:

- Preference patterns: What do customers consistently respond to or reject?

- Behavior trends: How do customers interact with different elements?

- Expectation indicators: What expectations do customers bring to your store?

An electronics retailer tested a simplified product specification display, assuming customers wanted less technical information. The test showed significantly lower conversions. Customer behavior analysis revealed shoppers were actually leaving to search for more detailed specs elsewhere. This “failed” test uncovered a critical insight: their audience valued comprehensive technical information, contradicting conventional wisdom about simplification. Their next test – enhancing and better organizing specification data – drove a 14% conversion increase.

Remember: In A/B testing, there are no true failures – only opportunities to better understand your customers.

Advanced A/B Testing Strategies for Shopify Growth

As your testing program matures, simple A/B tests may no longer yield the substantial gains you’re seeking. These advanced testing methodologies can help break through performance plateaus and unlock deeper optimization opportunities.

Multivariate Testing for Complex Optimizations

Unlike standard A/B tests that change one element at a time, multivariate testing (MVT) examines how multiple elements interact when changed simultaneously. This approach is particularly valuable when:

- You’re planning comprehensive page redesigns

- Multiple elements might have interdependent effects

- You need to optimize several components within a limited timeframe

For example, rather than testing product image size, call-to-action button color, and price display separately (requiring three sequential tests), MVT tests all possible combinations simultaneously. This not only saves time but reveals interaction effects that sequential testing might miss.
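Enumerating the combinations makes the traffic cost concrete. In this sketch the element names and variations are hypothetical stand-ins for the three elements above, each with two options, and the weekly traffic figure is illustrative.

```python
from itertools import product

# Hypothetical elements and variations for a product page MVT
elements = {
    "image_size": ["standard", "large"],
    "button_color": ["blue", "orange"],
    "price_display": ["price only", "price with savings"],
}

# Every combination of one variation per element (a full factorial design)
cells = [dict(zip(elements, combo)) for combo in product(*elements.values())]
for cell in cells:
    print(cell)

weekly_visitors = 10_000  # hypothetical traffic to the page under test
print(f"{len(cells)} cells, about {weekly_visitors // len(cells):,} visitors each per week")
```

Three elements with two options each already produce eight cells, so each cell sees only an eighth of the traffic, which is why MVT needs far more visitors than a simple A/B test.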

A home goods retailer discovered through MVT that while a green “Add to Cart” button outperformed orange when paired with lifestyle product images, the orange button actually performed better when paired with product-only images. This interaction would have been invisible with traditional A/B testing.

However, MVT comes with significant requirements:

- Substantial traffic volume: Testing multiple variations simultaneously demands much larger sample sizes

- Longer test durations: Reaching statistical significance takes more time

- More complex analysis: Interpreting interaction effects requires deeper statistical understanding

For most Shopify stores, I recommend MVT only when you have at least 10,000 weekly visitors to the page being tested. Smaller stores should stick with sequential A/B testing for more reliable results.

When analyzing MVT results, look beyond which specific combination performed best. The real value lies in understanding how elements interact – information that guides future design decisions across your entire store.
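For a simple two-by-two case like the button color and image style example, one common way to quantify an interaction is a difference-in-differences of the cell conversion rates. The rates below are hypothetical, and judging whether an interaction is statistically reliable requires more than this point estimate (for instance, a regression with an interaction term).

```python
def interaction_effect(rates: dict[tuple[str, str], float],
                       factor_a: tuple[str, str], factor_b: tuple[str, str]) -> float:
    """2x2 interaction: how the effect of factor A changes across levels of factor B."""
    a1, a2 = factor_a
    b1, b2 = factor_b
    effect_of_a_at_b1 = rates[(a1, b1)] - rates[(a2, b1)]
    effect_of_a_at_b2 = rates[(a1, b2)] - rates[(a2, b2)]
    return effect_of_a_at_b1 - effect_of_a_at_b2  # near zero means no interaction


# Hypothetical conversion rates by (button color, image style)
rates = {
    ("green", "lifestyle"): 0.034,
    ("orange", "lifestyle"): 0.030,
    ("green", "product-only"): 0.027,
    ("orange", "product-only"): 0.031,
}
effect = interaction_effect(rates, ("green", "orange"), ("lifestyle", "product-only"))
print(f"Interaction: {effect:+.3f}")
```

Here green leads by 0.4 points with lifestyle images and trails by 0.4 points with product-only images, so the interaction (0.8 points) is larger than either main effect.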

Sequential and Adaptive Testing Methods

As your testing program evolves, consider these sophisticated approaches that build upon basic A/B testing:

Sequential testing creates a structured progression of tests, with each building on insights from the last. Instead of isolated experiments, you develop a testing roadmap where each test informs the next.

A successful sequential testing flow might look like this:

- Test product page headline variations to identify messaging that resonates

- Use winning messaging to develop and test different visual presentations

- Combine winning message and visuals to test call-to-action variations

- Apply learnings to develop and test an optimized overall layout

Each test builds on previous insights, creating cumulative improvements that often exceed what individual tests could achieve.

Multi-armed bandit testing offers a dynamic alternative to traditional fixed-split testing. Instead of maintaining a static traffic allocation throughout the test, multi-armed bandit algorithms automatically shift traffic toward better-performing variants as data accumulates.

The advantages are clear:

- More visitors experience the better-performing version, increasing overall conversions during testing

- Poorly performing variants get less exposure, reducing potential revenue loss

- Tests can run continuously, with new challengers introduced without restarting
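Thompson sampling is one widely used bandit algorithm; third-party tools may use it or other strategies. This sketch simulates it with Python’s standard library: each variant’s conversion rate gets a Beta posterior, each visitor sees the variant whose randomly drawn rate is highest, and the outcome updates that variant’s posterior. The function name and the three conversion rates are hypothetical; in a real test the rates are unknown.

```python
import random


def thompson_sampling(true_rates: list[float], visitors: int, seed: int = 1) -> list[int]:
    """Simulate Beta-Bernoulli Thompson sampling and return visitors shown each variant.

    `true_rates` are used only to simulate whether each visitor converts.
    """
    rng = random.Random(seed)
    conversions = [0] * len(true_rates)
    misses = [0] * len(true_rates)
    for _ in range(visitors):
        # Draw a plausible rate for each variant from its Beta(1 + conversions, 1 + misses) posterior
        draws = [rng.betavariate(1 + c, 1 + m) for c, m in zip(conversions, misses)]
        chosen = draws.index(max(draws))  # show the variant with the highest draw
        if rng.random() < true_rates[chosen]:
            conversions[chosen] += 1
        else:
            misses[chosen] += 1
    return [c + m for c, m in zip(conversions, misses)]


rates = [0.02, 0.03, 0.06]
traffic = thompson_sampling(rates, visitors=20_000)
for rate, shown in zip(rates, traffic):
    print(f"{rate:.0%} variant: {shown:,} visitors")
```

Because allocation depends on observed results, bandits optimize revenue during the test rather than producing a clean fixed-split comparison, which is a trade-off worth keeping in mind.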

A beauty brand implemented multi-armed bandit testing for their homepage hero section, continuously testing five different value propositions. The algorithm automatically directed more traffic to the best-performing variants while still collecting data on all options. This approach generated 9% more revenue during the testing period compared to a traditional A/B test with fixed traffic splits.

For Shopify stores, multi-armed bandit testing is available through third-party tools that integrate with the platform. It’s particularly valuable for high-traffic pages where the opportunity cost during testing is substantial.

Personalization testing takes segmentation to the next level by testing which experiences work best for specific customer groups. Rather than finding a single “best” version for everyone, this approach identifies optimal experiences for different segments.

Effective personalization testing might explore:

- Different product recommendations for first-time versus repeat customers

- Varied messaging for visitors from different traffic sources

- Custom layouts based on previous browsing or purchase behavior

A fashion retailer tested personalized homepage content based on previous purchase categories. New visitors saw a general bestsellers display, while returning customers saw content tailored to categories they’d previously browsed. This personalized approach increased repeat customer conversion rates by 26% compared to the one-size-fits-all control.

As your testing sophistication grows, remember that advanced methods should complement, not replace, foundational A/B testing. The core principles of clear hypotheses, adequate sample sizes, and rigorous analysis remain essential regardless of methodology.

Building a Data-Driven Testing Culture for Long-Term Shopify Success

Individual tests create short-term wins, but a systematic testing program drives sustainable growth. Let’s explore how to transform occasional testing into a core business practice that consistently improves your Shopify store.

Establishing Testing Processes and Workflows

Effective testing programs require structure. Ad-hoc testing yields ad-hoc results. Instead, develop these foundational elements:

A testing calendar ensures consistency and proper resource allocation. It should include:

- Regular testing schedules (e.g., launching new tests every two weeks)

- Seasonal considerations (planning tests around major shopping periods)

- Coordination with marketing campaigns to prevent conflicting initiatives

A home goods retailer mapped their annual testing calendar to align with their business cycle – running checkout optimization tests before Black Friday, product page tests during January when introducing new collections, and email capture tests during slower summer months. This strategic timing maximized impact while ensuring tests didn’t disrupt peak selling periods.

Cross-functional involvement strengthens your testing program by incorporating diverse perspectives. Establish clear roles for team members:

- Test owners: Responsible for hypothesis development and overall execution

- Designers/developers: Create and implement test variations

- Analysts: Evaluate results and extract insights

- Stakeholders: Provide domain expertise and approval when needed

Create a standardized process flow that includes:

- Hypothesis formulation and approval

- Test design and development

- QA and pre-launch verification

- Test execution and monitoring

- Analysis and insight development

- Implementation planning for winners

- Documentation and knowledge sharing

A jewelry store instituted a weekly testing standup meeting where team members shared current test status, discussed upcoming initiatives, and reviewed recent results. This regular touchpoint kept optimization top-of-mind across departments and ensured testing momentum continued even during busy periods.

Documentation and knowledge management preserve insights and prevent repeating mistakes. Create standardized templates for:

- Test plans that clearly outline hypotheses, variables, and success metrics

- Results reports that document findings, interpretations, and next steps

- A centralized repository of all test history, accessible to the entire team

A fashion retailer created a simple “test card” template that captured the essence of each test on a single page: hypothesis, key metrics, results, and learnings. These cards, stored in a shared digital workspace, became an invaluable reference that new team members could quickly review to understand past optimization efforts.
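A test card maps naturally onto a small record type. The sketch below rests on two assumptions. The field names mirror the card described above, and a JSON string stands in for whatever shared workspace a team actually uses.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class TestCard:
    """One-page summary of a test: hypothesis, key metrics, result, and learnings."""
    name: str
    hypothesis: str
    key_metrics: list[str]
    result: str  # e.g. "win", "loss", or "inconclusive"
    learnings: list[str] = field(default_factory=list)


def cards_to_json(cards: list[TestCard]) -> str:
    """Serialize cards for storage in a shared location (assumed here to be a JSON file)."""
    return json.dumps([asdict(c) for c in cards], indent=2)


def cards_from_json(text: str) -> list[TestCard]:
    """Rebuild cards from the JSON produced by cards_to_json."""
    return [TestCard(**d) for d in json.loads(text)]


def search(cards: list[TestCard], keyword: str) -> list[TestCard]:
    """Case-insensitive keyword search over hypotheses and learnings."""
    kw = keyword.lower()
    return [c for c in cards
            if kw in c.hypothesis.lower() or any(kw in note.lower() for note in c.learnings)]
```

Storing cards as plain JSON keeps the archive tool-agnostic. The `search` helper shows how a new team member might look up past learnings on a topic before proposing a repeat test.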

Measuring and Communicating Testing Program ROI

Testing programs require resources. Demonstrating their value ensures continued support and investment. Here’s how to quantify and communicate testing impact:

Calculate testing program value by tracking these metrics:

- Cumulative revenue impact: Additional revenue generated from implemented test wins

- Return on investment: Revenue gains compared to program costs

- Win rate: Percentage of tests that yield implementable improvements

- Average impact per successful test: Typical lift from winning tests

A beauty brand calculated that their testing program generated $430,000 in incremental annual revenue from a $60,000 investment in tools and resources. That is about 7.2 times its cost, or a net ROI of roughly 6.2x. This concrete value demonstration secured executive support for expanding their testing team.
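The four program metrics can be computed from a simple log of completed tests. In this sketch, `ExperimentResult` and its fields are hypothetical. `annual_revenue_impact` is assumed to be the incremental annual revenue attributed to each implemented winner. ROI is defined above as revenue gains compared to program costs, so the function reports two figures. One is the plain revenue-to-cost ratio; the other is a net ROI that subtracts the cost first.

```python
from dataclasses import dataclass


@dataclass
class ExperimentResult:
    name: str
    won: bool                     # True if the variation was implemented as a winner
    annual_revenue_impact: float  # incremental annual revenue attributed to the change (0 for losers)
    relative_lift: float          # measured lift on the primary metric, e.g. 0.052 for +5.2%


def program_metrics(results: list[ExperimentResult], program_cost: float) -> dict[str, float]:
    """Summarize a testing program: cumulative impact, ROI, win rate, and average lift per win."""
    wins = [r for r in results if r.won]
    cumulative = sum(r.annual_revenue_impact for r in wins)
    return {
        "cumulative_revenue_impact": cumulative,
        "revenue_to_cost": cumulative / program_cost,           # revenue per dollar spent
        "net_roi": (cumulative - program_cost) / program_cost,  # gain after recovering the cost
        "win_rate": len(wins) / len(results) if results else 0.0,
        "avg_lift_per_win": sum(r.relative_lift for r in wins) / len(wins) if wins else 0.0,
    }
```

Plugging in the beauty brand's totals gives $430,000 of incremental revenue against $60,000 of cost. That is a revenue-to-cost ratio of about 7.17 and a net ROI of about 6.17. Both figures use revenue rather than profit, so substituting gross margin for revenue gives a stricter view of the program's return.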

Reporting outcomes effectively ensures stakeholders understand and appreciate testing value:

- Create executive dashboards that highlight business impact metrics, not just test details

- Use visual representations to make results immediately comprehensible

- Frame outcomes as business stories rather than technical reports

Instead of reporting “5.2% improvement in add-to-cart rate with 97% confidence,” a home goods retailer translated this to “Our product page test will generate approximately $157,000 in additional annual revenue based on current traffic levels.” This business-oriented framing resonated far more with executive stakeholders.
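Translating a percentage lift into a dollar figure is simple arithmetic once its assumptions are stated. The sketch below is hypothetical. It takes current monthly sessions, conversion rate, and average order value for the traffic that sees the tested page. It also assumes a lift measured on add-to-cart rate carries through to completed orders in the same proportion. That is not guaranteed and is worth checking against purchase data.

```python
def projected_annual_revenue_gain(monthly_sessions: float, conversion_rate: float,
                                  avg_order_value: float, relative_lift: float) -> float:
    """Project extra annual revenue from a relative lift.

    Assumptions (hypothetical; check against your own data):
    - traffic, conversion rate, and average order value stay at current levels;
    - the lift measured on add-to-cart rate carries through to completed orders
      in the same proportion.
    """
    baseline_annual_revenue = monthly_sessions * 12 * conversion_rate * avg_order_value
    return baseline_annual_revenue * relative_lift


if __name__ == "__main__":
    # Illustrative inputs only; not taken from the retailer in the example.
    gain = projected_annual_revenue_gain(150_000, 0.02, 85.0, 0.052)
    print(f"Projected additional annual revenue: ${gain:,.0f}")
```

Take illustrative inputs of 150,000 monthly sessions, a 2% conversion rate, an $85 average order value, and a 5.2% lift. The projection comes to roughly $159,000 a year. Running the same function at the lower and upper bounds of the lift's confidence interval gives executives a range. A single number can sound more certain than the test supports.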

Scaling testing efforts should be based on demonstrated results:

- Allocate resources based on ROI from different test types and page areas

- Invest in more sophisticated tools as your program matures

- Develop team capabilities through training and selective hiring
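Allocating by ROI can start as a grouping exercise over the test log. This sketch assumes a hypothetical log of `(area, cost, annual_revenue_impact)` tuples. It splits a budget in proportion to each area's revenue-to-cost ratio. Proportional splitting is a simple heuristic, not an optimum. Returns in an area can shrink once its easiest wins are used up, so treat the output as a starting point for discussion.

```python
from collections import defaultdict


def roi_by_area(tests):
    """Revenue-to-cost ratio per page area from (area, cost, annual_revenue_impact) tuples."""
    cost = defaultdict(float)
    gain = defaultdict(float)
    for area, test_cost, impact in tests:
        cost[area] += test_cost
        gain[area] += impact
    # Areas with zero recorded cost are left out to avoid dividing by zero.
    return {area: gain[area] / cost[area] for area in cost if cost[area] > 0}


def allocate_budget(roi, budget):
    """Split `budget` across areas in proportion to their historical ratios (a heuristic)."""
    total = sum(roi.values())
    if total <= 0:
        return {}
    return {area: budget * ratio / total for area, ratio in roi.items()}
```

Suppose a hypothetical log shows product-page tests returning 6x their cost and checkout tests 2x. An $80,000 budget would then split into $60,000 for product pages and $20,000 for checkout.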

A fashion retailer started with basic A/B testing on product pages, then systematically expanded their program as results proved valuable. They progressively added checkout testing, personalization capabilities, and eventually built a dedicated three-person optimization team – each expansion justified by quantifiable returns from previous testing phases.

The most successful Shopify stores don’t just run tests – they build testing into their organizational DNA. Every major decision becomes an opportunity to gather data, every feature launch becomes a test, and every team member develops a data-driven mindset that continually drives store improvement.

When testing becomes cultural rather than occasional, your Shopify store gains a sustainable competitive advantage that compounds over time through better product pages, more efficient checkouts, more compelling messaging, and ultimately, happier customers who convert at higher rates.

Ready to supercharge your Shopify store sales with perfectly optimized data-driven campaigns? Growth Suite is a free Shopify app that helps you run effective on-site discount and email collection campaigns using advanced data analytics. It analyzes customer behavior to deliver personalized offers at the perfect moment, increasing conversions and revenue. Install Growth Suite with a single click and start making smarter, data-backed decisions that drive real results!