Why A/B Testing Matters for Shopify Store Owners

Ever wonder why some Shopify stores consistently outperform their competitors? Behind almost every successful e-commerce business lies a secret weapon: systematic A/B testing. This isn’t just another marketing buzzword—it’s the difference between guessing what works and knowing what works.

Picture this: You’ve just spent hours redesigning your product page. The new layout looks fantastic to you, but after launch, your conversion rate drops by 15%. Without an A/B test, you can’t tell whether the redesign caused the drop or something else did, such as a shift in traffic or a competitor’s sale. You might never learn that your beautiful new design was actually costing you sales.

What makes A/B testing so powerful for Shopify store owners? It transforms abstract theories about customer behavior into concrete, actionable insights. Instead of making changes based on hunches or following generic “best practices,” you make decisions backed by your own customer data.

The Role of A/B Testing in Shopify Store Growth

A/B testing is your shield against costly mistakes and your pathway to continuous improvement. By comparing two versions of a webpage or element, you can determine which performs better for your specific audience. This methodical approach drives growth in several key ways:

First, it validates changes before full implementation. That product description rewrite you’re considering? Test it with a portion of your traffic before rolling it out storewide. The results might surprise you—and save you from a potential conversion disaster.

Second, it dramatically reduces guesswork. Instead of wondering whether green or red buttons drive more clicks, you’ll have data from your own visitors to decide. This maximizes ROI on your design and marketing efforts by helping you implement only the changes that actually improve performance.

Unique Advantages of Shopify’s Ecosystem for A/B Testing

Shopify store owners enjoy distinct advantages when it comes to testing. The platform’s robust app ecosystem offers specialized testing tools that integrate seamlessly with your store. These purpose-built solutions eliminate the technical hurdles that often discourage store owners from testing regularly.

What’s more, Shopify’s architecture enables rapid deployment and measurement of changes. Want to test a new product page layout? With the right app, you can have your experiment running in minutes rather than days, accelerating your learning cycle and competitive edge. (Checkout changes are more restricted: deeper checkout customization generally requires the Shopify Plus plan.)

Who Should Care: Audience Segmentation

Whether you launched your store last week or you’re managing a seven-figure operation, A/B testing offers specific benefits tailored to your experience level:

For beginner Shopify users, foundational testing builds good habits early. Even simple tests like headline variations or product image layouts can yield significant improvements when you’re building from the ground up, although lower traffic means each test takes longer to produce a reliable answer.

For intermediate store owners, structured experimentation becomes a pathway to optimization and scaling. You’ve got the basics down, and now you’re ready to refine your funnel through systematic testing.

For advanced Shopify entrepreneurs, sophisticated multi-layered testing enables strategic growth. You might run concurrent experiments across different segments of your traffic, combining insights to inform broader business decisions.

But here’s the reality check: even experienced Shopify merchants make critical testing mistakes that undermine their results. Let’s explore these pitfalls and how to avoid them, starting with the most fundamental errors.

Fundamental A/B Testing Mistakes on Shopify

Testing Too Many Elements at Once

Imagine you’re a detective questioning five suspects in the same room at once, all talking over one another. When someone lets slip an important clue, how will you know which suspect said it? This is precisely the problem with testing multiple elements at once.

Yet it happens constantly. A store owner changes the headline, button color, and product images in a single test. When conversion rates improve (or decline), which change made the difference? It’s impossible to know.

The Problem with Multivariate Changes

When you modify several elements simultaneously, you create what testing experts call “confounding variables.” These multiple changes obscure which specific variable drove the result.

Consider this real-world example: A fashion boutique owner updated their product page with a new headline (“Limited Edition Collection” instead of “New Arrivals”), changed the Add to Cart button from green to red, and swapped lifestyle product images for white-background photos—all in one test.

The result? A 22% conversion increase. Worth keeping, but of little use for future optimization, because the owner couldn’t isolate which change (or combination of changes) created the improvement. One of the changes may even have been hurting conversions while the others masked it.

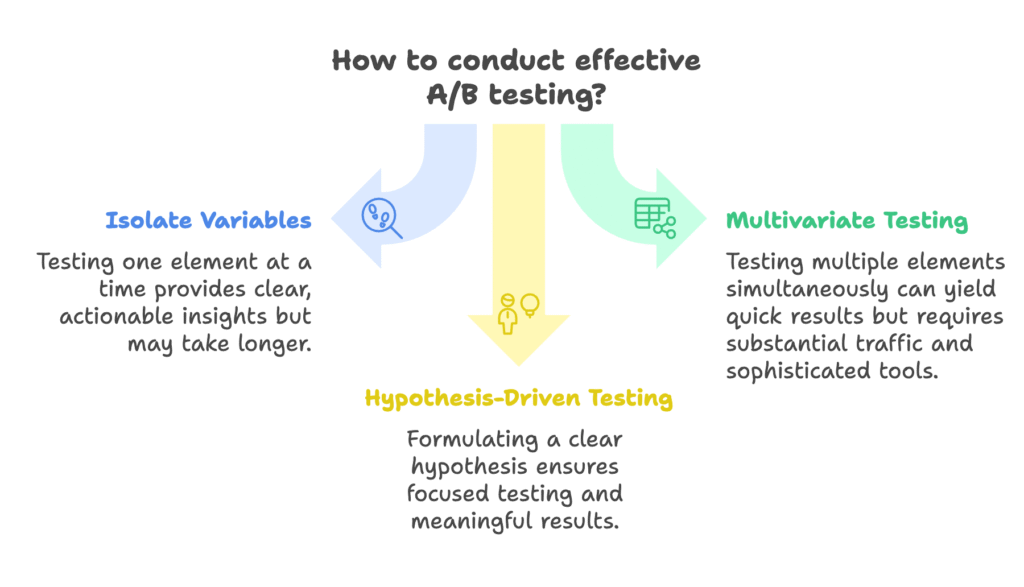

Best Practice: Isolate Variables

Test one element per experiment for clear, actionable insights. This approach might seem slower initially, but it builds a foundation of reliable knowledge that compounds over time.

If you absolutely must test multiple elements simultaneously (perhaps due to seasonal time constraints), use proper multivariate testing tools. These sophisticated solutions are designed to isolate the impact of individual changes, but they require substantial traffic to generate reliable results.
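To see why multivariate tests are so traffic-hungry, consider a full-factorial design, which splits visitors across every combination of versions. The sketch below simply multiplies this out. The 5,000-visitors-per-version figure is a hypothetical placeholder rather than a recommendation, and some testing tools use more efficient designs than a full factorial.

```python
from math import prod


def full_factorial_cells(variants_per_element: list[int]) -> int:
    """Number of distinct page versions in a full-factorial multivariate test."""
    return prod(variants_per_element)


def total_visitors_needed(variants_per_element: list[int], visitors_per_cell: int) -> int:
    """Total traffic if every combination needs the same number of visitors."""
    return full_factorial_cells(variants_per_element) * visitors_per_cell


# Headline, button color, and product images, each with 2 versions (original + change).
elements = [2, 2, 2]
per_cell = 5_000  # hypothetical; use the output of your own sample size calculator

print(full_factorial_cells(elements))             # 8 page versions
print(total_visitors_needed(elements, per_cell))  # 40000 visitors in total
print(total_visitors_needed([2], per_cell))       # 10000 for a one-element A/B test
```

Under these assumptions, the three-element test needs four times the traffic of a single-element A/B test. That is why isolating one variable at a time is usually the practical choice for smaller stores.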

Starting Without a Clear Hypothesis

Would you begin a road trip without deciding on a destination? Surprisingly, many store owners launch A/B tests without a clear hypothesis—essentially testing blind.

The Risk of Random Changes

Unfocused tests typically yield inconclusive results and waste precious time and traffic. I’ve seen store owners randomly change button colors or move elements around “just to see what happens.” This approach rarely delivers meaningful insights.

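Without a hypothesis, you also lack context for interpreting results. Was that 5% conversion increase meaningful or just statistical noise? Without a predefined metric and expected effect, you have no benchmark to judge it against, and it becomes tempting to scan metric after metric until one happens to look like a win.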

Best Practice: Hypothesis-Driven Testing

For each test, formulate a specific, measurable hypothesis. This should follow a simple format:

“We believe that [change] will result in [outcome] because [rationale].”

For example: “We believe that adding trust badges near the Add to Cart button will increase conversion rate by at least 5% because it will address security concerns that customers have expressed in exit surveys.”

Document your hypothesis before launching each test. This practice not only clarifies your thinking but also creates a valuable record for future reference. When tests conclude, compare results against your predictions to refine your understanding of customer behavior.
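If you keep your hypotheses in a script or spreadsheet export, recording the expected lift as a number makes the "compare results against your predictions" step mechanical. This is a minimal sketch: the `Hypothesis` class and its fields are hypothetical names, and it checks only the size of the effect. Statistical significance is a separate check.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    change: str
    outcome_metric: str
    min_relative_lift: float  # e.g. 0.05 for "at least 5%"
    rationale: str

    def statement(self) -> str:
        """Render the hypothesis in the "We believe that..." format."""
        return (f"We believe that {self.change} will increase {self.outcome_metric} "
                f"by at least {self.min_relative_lift:.0%} because {self.rationale}.")

    def prediction_met(self, control_rate: float, variant_rate: float) -> bool:
        """True if the observed relative lift reaches the predicted minimum."""
        observed_lift = variant_rate / control_rate - 1
        return observed_lift >= self.min_relative_lift


trust_badges = Hypothesis(
    change="adding trust badges near the Add to Cart button",
    outcome_metric="conversion rate",
    min_relative_lift=0.05,
    rationale="it will address security concerns customers raised in exit surveys",
)
print(trust_badges.statement())
print(trust_badges.prediction_met(0.030, 0.0318))  # 6% observed lift vs 5% predicted
```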

With these foundational mistakes covered, let’s examine the statistical pitfalls that often undermine even well-conceived tests.

Statistical and Data-Related Pitfalls

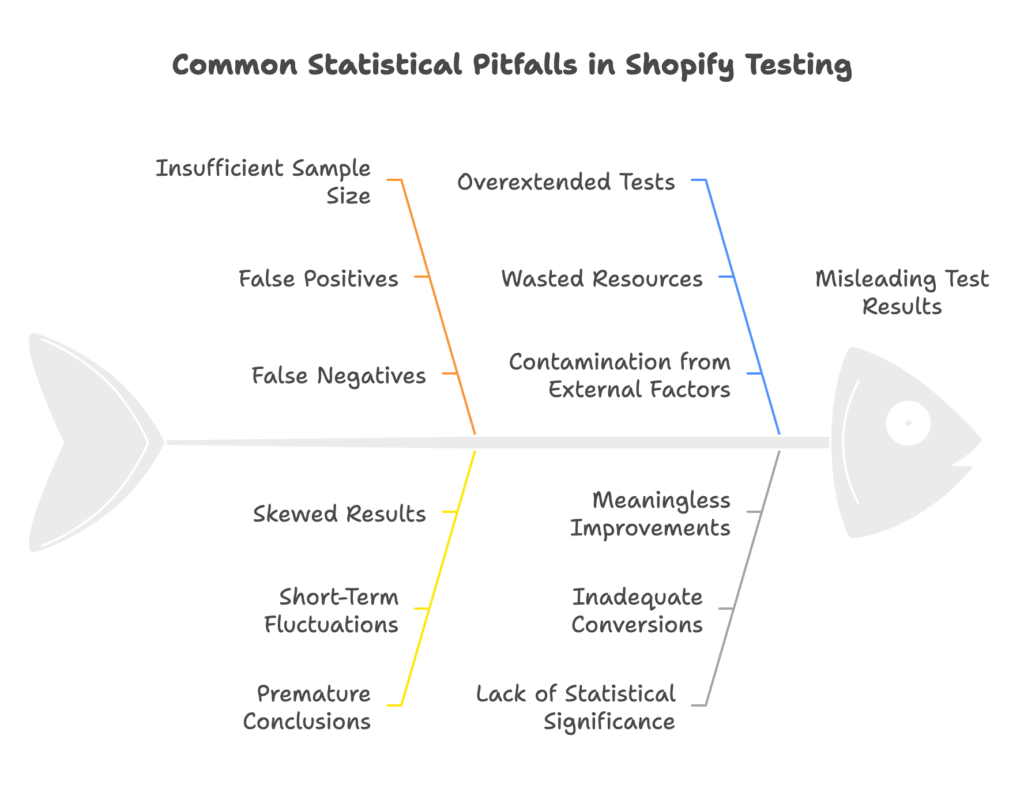

Insufficient Sample Size

Statistical significance isn’t just jargon—it’s the difference between reliable insights and costly mirages. Yet many Shopify store owners jump to conclusions based on woefully inadequate data.

Small Samples Lead to Misleading Results

Working with small sample sizes dramatically increases your risk of false positives and false negatives. A false positive occurs when random chance creates an apparent winner that wouldn’t hold up with more data. A false negative happens when a truly superior variation appears ineffective due to insufficient testing.

I’ve witnessed store owners declare a “winning” variation after just a few hundred visitors, only to watch that “improvement” vanish when implemented fully. One apparel store owner changed their checkout button based on a test with 250 visitors per variation, then suffered a 3% conversion drop when applied storewide.

Best Practice: Calculate and Reach Statistical Significance

Before launching any test, determine the minimum sample size required using an online calculator. These tools consider your current conversion rate, the minimum improvement you want to detect, and your desired confidence level.
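Most online calculators use some version of the standard two-proportion formula. The sketch below is one common normal-approximation form for a two-sided test. It assumes a 50/50 split and a single primary metric, and it is not the exact method of any particular calculator or app.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variation(baseline_rate: float, min_relative_lift: float,
                              alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed in EACH variation to detect the given relative lift with a
    two-sided two-proportion z-test (normal approximation)."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + min_relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


# Example: 2% baseline conversion rate, smallest lift worth detecting = 20% (2.0% -> 2.4%).
n = sample_size_per_variation(0.02, 0.20)
print(n, "visitors per variation, about", round(n * 0.02), "control conversions")
```

For this example the result is roughly 21,100 visitors per variation, or a bit over 400 conversions in the control. Halving the detectable lift roughly quadruples the requirement, because the difference is squared in the denominator.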

As a rough rule of thumb, aim for at least 100 conversions (not just visitors) per variation before drawing conclusions, and treat that as a floor rather than a target: detecting small improvements can require several times more. Low-traffic stores may need to run tests longer or focus on testing high-traffic pages first.

Remember that statistical significance doesn’t guarantee a meaningful business impact. A 0.1% conversion improvement might be statistically significant with enough traffic, but not worth implementing if the change requires substantial resources.

Ending Tests Too Early or Letting Them Run Too Long

Timing is everything in A/B testing. End a test too soon, and you risk acting on statistical flukes. Let it run too long, and you waste opportunities while potentially introducing new variables.

Premature Conclusions

Early results often reflect short-term fluctuations rather than true performance differences. The excitement of seeing an apparent improvement can tempt store owners to stop tests prematurely.

One electronics retailer ended a pricing display test after just four days when the variation showed a 15% conversion increase. Upon implementation, the “improvement” disappeared entirely—the early results had been skewed by a weekend promotion.
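You can see how much damage early stopping does with a simulated A/A test, where both variations are identical, so any "winner" is a false positive by definition. This sketch compares checking significance once at the planned end with checking every day and stopping at the first p < 0.05. The traffic, conversion rate, and test length are arbitrary assumptions chosen for illustration.

```python
import random
from math import sqrt
from statistics import NormalDist


def two_sided_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Pooled two-proportion z-test; returns the two-sided p-value."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:  # no conversions (or only conversions) in both arms
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate_aa_tests(runs: int = 200, days: int = 14, daily_visitors: int = 200,
                      rate: float = 0.05, alpha: float = 0.05,
                      seed: int = 7) -> tuple[float, float]:
    """Simulate A/A tests and return two false-positive rates:
    (checked once at the planned end, checked daily with stop-at-first-win)."""
    rng = random.Random(seed)
    fixed_hits = peeking_hits = 0
    for _ in range(runs):
        conv_a = conv_b = visitors = 0
        flagged_while_peeking = False
        for _ in range(days):
            visitors += daily_visitors  # per variation
            conv_a += sum(rng.random() < rate for _ in range(daily_visitors))
            conv_b += sum(rng.random() < rate for _ in range(daily_visitors))
            if two_sided_p_value(conv_a, visitors, conv_b, visitors) < alpha:
                flagged_while_peeking = True  # a daily checker would have stopped here
        fixed_hits += two_sided_p_value(conv_a, visitors, conv_b, visitors) < alpha
        peeking_hits += flagged_while_peeking
    return fixed_hits / runs, peeking_hits / runs


fixed_rate, peeking_rate = simulate_aa_tests()
print(f"checked once: {fixed_rate:.1%}  checked daily: {peeking_rate:.1%}")
```

The check-once rate should land near the nominal 5%. The daily-check rate is typically several times higher, even though neither variation is actually better.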

Overextending Tests

Conversely, running tests indefinitely creates problems too. Beyond wasting resources, extended tests risk contamination from seasonal changes, marketing activities, or competitor actions.

A test that runs through both regular weeks and holiday shopping periods, for instance, may yield results that aren’t applicable to either time frame.

Best Practice: Optimal Test Duration

Strike the right balance by following these guidelines:

- Run tests for a minimum of one to two complete business cycles (usually 1-2 weeks) to capture day-of-week variations

- Keep the test running until you reach your predetermined sample size, then check for statistical significance, rather than stopping the moment significance first appears

- Account for natural traffic fluctuations by ensuring your test period includes both weekdays and weekends

- End tests before major seasonal shifts or marketing campaigns that could skew results

Pre-determine your test duration and stopping criteria before launch to avoid the temptation to end tests based on early results.
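Turning a sample size into a fixed duration is simple arithmetic. This sketch assumes every daily visitor enters the test, traffic is split evenly, and a business cycle is one week. The visitor figures in the example are hypothetical.

```python
from math import ceil


def planned_test_days(visitors_per_variation: int, variations: int,
                      daily_test_visitors: int, min_weeks: int = 1) -> int:
    """Days to run a test: enough to reach the planned sample size, rounded up
    to whole weeks so every weekday and weekend day is covered equally."""
    days_for_sample = ceil(visitors_per_variation * variations / daily_test_visitors)
    weeks = max(min_weeks, ceil(days_for_sample / 7))
    return weeks * 7


print(planned_test_days(20_000, 2, 3_000))  # 40,000 visitors / 3,000 per day -> 14 days
print(planned_test_days(20_000, 2, 2_000))  # 20 days of traffic -> rounded up to 21
```

Writing the end date down before launch, as the result of a calculation like this, removes the temptation to stop early when the numbers look good.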

Now that we’ve covered these statistical pitfalls, let’s examine implementation mistakes that are particularly relevant to Shopify stores.

Implementation Mistakes Specific to Shopify Stores

Not Using the Right A/B Testing Tools or Apps

Shopify’s app ecosystem offers powerful testing solutions, yet many store owners resort to makeshift methods that compromise reliability and efficiency.

Relying on Manual or Inadequate Methods

I’ve seen store owners attempt to conduct A/B tests by manually switching between page versions on alternate days or by comparing periods before and after a change. These approaches introduce countless variables and make accurate analysis nearly impossible.

Others rely solely on basic analytics without proper split-testing functionality. This creates inconsistent user experiences and fails to account for variables like traffic source, time of day, or device type.

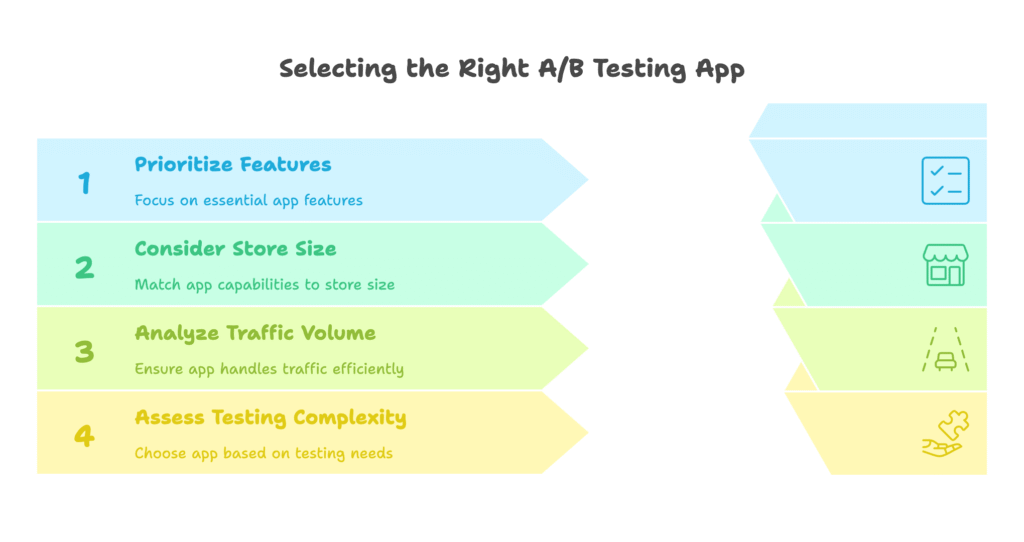

Best Practice: Leverage Shopify-Compatible A/B Testing Apps

Invest in dedicated testing tools from the Shopify App Store. These solutions handle the technical aspects of visitor allocation, data collection, and statistical analysis—allowing you to focus on crafting effective tests.
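Part of what these tools handle is keeping each visitor in the same variation across page views and return visits, which manual day-by-day switching cannot do. A common way to do this is deterministic hashing. The sketch below illustrates that general technique only; it is not how any specific app works, and the visitor ID (for example, a first-party cookie value) and experiment ID are hypothetical.

```python
import hashlib


def assign_variation(visitor_id: str, experiment_id: str,
                     variations: tuple[str, ...] = ("control", "variant")) -> str:
    """Deterministically map a visitor to a variation.

    Hashing the experiment ID together with the visitor ID keeps each visitor's
    assignment stable across visits, while letting separate experiments split
    traffic independently of one another."""
    key = f"{experiment_id}:{visitor_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variations)
    return variations[bucket]


print(assign_variation("visitor-123", "pdp-headline"))
```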

When evaluating testing apps, prioritize these features:

- Visual editors that allow changes without coding

- Visitor segmentation capabilities (by device, location, source, etc.)

- Statistical significance calculations

- Integration with your Shopify analytics

- Minimal impact on page load speed

Google Optimize, once a popular free option, was shut down by Google in September 2023. Current alternatives include Convert Experiences and AB Tasty, along with dedicated testing apps in the Shopify App Store, though availability and pricing change over time. The right tool depends on your store’s size, traffic volume, and testing complexity.

Neglecting Audience Segmentation

Not all visitors are created equal. A change that delights new customers might frustrate returning ones. Mobile users behave differently than desktop shoppers. Yet many store owners test as if their audience were a monolithic entity.

One-Size-Fits-All Testing

Testing without segmentation often leads to misleading aggregate results that mask important differences between audience groups.

Consider this scenario: A home goods store tested a simplified product page that removed detailed specifications. Overall, the test showed no significant difference in conversion rate. However, when analyzed by traffic source, the simplified page performed 24% better with social media visitors but 18% worse with search engine traffic.

Had the store implemented the change based on aggregate results, they would have missed an opportunity to tailor the experience to each traffic segment.

Best Practice: Segment and Analyze Results by Audience

Use Shopify analytics and your testing tool’s segmentation features to analyze results across different customer groups:

- New vs. returning customers

- Mobile vs. desktop users

- Traffic source (organic, paid, social, email, etc.)

- Geographic location

- Previous purchase behavior

When sample sizes allow, look for significant performance differences between segments. These insights often reveal opportunities for personalized experiences that dramatically outperform one-size-fits-all approaches.
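The home goods example shows how an aggregate result can hide opposing segment effects. This sketch reproduces that pattern with made-up counts (they are not the store’s real data) by computing the relative lift per traffic source and overall.

```python
from dataclasses import dataclass


@dataclass
class Arm:
    visitors: int
    conversions: int

    @property
    def rate(self) -> float:
        return self.conversions / self.visitors


def relative_lift(control: Arm, variant: Arm) -> float:
    return variant.rate / control.rate - 1


def combine(arms: list[Arm]) -> Arm:
    """Pool several segments into one aggregate arm."""
    return Arm(sum(a.visitors for a in arms), sum(a.conversions for a in arms))


# Hypothetical (control, variant) counts per traffic source, for illustration only.
segments = {
    "social": (Arm(2000, 60), Arm(2000, 74)),
    "search": (Arm(2000, 100), Arm(2000, 82)),
}

for name, (control, variant) in segments.items():
    print(f"{name}: {relative_lift(control, variant):+.1%}")

overall_control = combine([c for c, _ in segments.values()])
overall_variant = combine([v for _, v in segments.values()])
print(f"overall: {relative_lift(overall_control, overall_variant):+.1%}")
```

With these numbers the overall lift is only about -2.5%, while social rises by about 23% and search falls by 18%. Note, however, that each segment needs its own significance check. At 2,000 visitors per arm, neither segment difference here would reach 95% confidence, which is why segment analysis depends on adequate sample sizes.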

If your traffic volume doesn’t support full segmentation, consider running targeted tests for your most valuable segments rather than testing your entire audience at once.

With these implementation considerations in mind, let’s explore how external factors can undermine even well-executed tests.

External Factors and Contextual Errors

Ignoring Seasonality and External Influences

A/B tests don’t happen in a vacuum. External factors can dramatically skew results, yet many Shopify merchants fail to consider these influences when planning and analyzing their experiments.

Overlooking Holidays, Promotions, or Campaigns

External events create temporary shifts in traffic quality, visitor intent, and buying behavior that can render test results unreliable for normal conditions.

A kitchenware store learned this lesson the hard way after testing a streamlined checkout process during their Black Friday sale. The variation showed a substantial conversion increase, but when implemented permanently, the improvement vanished. The holiday shopping urgency had masked usability issues that became apparent during regular shopping periods.

Similarly, running tests while email campaigns or social media promotions are active can attract atypical visitors who behave differently than your usual traffic.

Best Practice: Control for and Document External Factors

Mitigate these risks by carefully timing your tests:

- Schedule important tests during “typical” business periods whenever possible

- Avoid major holidays, promotional events, and unusual external circumstances

- If testing during promotional periods is unavoidable, ensure both variations are exposed equally to the same conditions

- Maintain a detailed log of any external events that could influence results

When analyzing results, consider whether external factors might have impacted performance. In some cases, you may need to extend or repeat tests during more representative periods.
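If your external-events log records start and end dates, checking a test window against it takes only a date-overlap comparison. This is a minimal sketch, and the events listed are hypothetical examples.

```python
from datetime import date

# Hypothetical event log: (description, first day, last day), inclusive.
events: list[tuple[str, date, date]] = [
    ("Black Friday sale", date(2024, 11, 29), date(2024, 12, 2)),
    ("Spring email campaign", date(2024, 3, 4), date(2024, 3, 10)),
]


def overlapping_events(test_start: date, test_end: date,
                       events: list[tuple[str, date, date]]) -> list[str]:
    """Return the names of logged events whose date range overlaps the test window."""
    return [name for name, start, end in events
            if start <= test_end and end >= test_start]


print(overlapping_events(date(2024, 11, 18), date(2024, 12, 1), events))
# ['Black Friday sale']
```

Running this check before launch helps you reschedule a test. Running it again at analysis time flags which results need extra caution.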

Failing to Document Tests and Results

Testing creates valuable institutional knowledge—but only if properly documented. Without systematic record-keeping, insights fade and mistakes repeat.

Lack of Institutional Knowledge

I’ve consulted with Shopify stores that repeatedly tested the same elements because they couldn’t remember previous results. Others implemented changes that contradicted successful past tests because that knowledge wasn’t preserved when team members changed.

One cosmetics brand tested four different product page layouts over two years—eventually circling back to their original design after forgetting the results of their initial tests. This wasted time, traffic, and implementation resources that could have been directed toward new opportunities.

Best Practice: Maintain a Testing Log

Create and maintain a detailed testing log that captures:

- Test hypothesis and rationale

- Screenshots or descriptions of all variations

- Start and end dates

- Traffic volume and conversion counts per variation

- Statistical significance and confidence level

- Segmented results (if applicable)

- Conclusions and next steps

- Implementation status

This documentation becomes increasingly valuable as your testing program matures. It prevents repeated mistakes, builds on previous learnings, and preserves insights when team members change.

A simple spreadsheet works for early efforts, but dedicated testing platforms often include built-in documentation features that streamline this process.
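If you start with a spreadsheet, a small script can keep every entry in the same columns. This sketch assumes a CSV file serves as the log. The `TestRecord` fields loosely mirror the list above, and all names are hypothetical.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class TestRecord:
    name: str
    hypothesis: str
    variations: str        # descriptions or screenshot links
    start_date: str
    end_date: str
    visitors_control: int
    conversions_control: int
    visitors_variant: int
    conversions_variant: int
    p_value: float
    segment_notes: str
    conclusion: str
    status: str            # e.g. "implemented", "rejected", "follow-up planned"


def write_log(records: list[TestRecord]) -> str:
    """Serialize test records to CSV text that opens in any spreadsheet app."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(TestRecord)])
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))
    return buffer.getvalue()
```

You could save the returned text to a file and open it in your spreadsheet tool of choice. The fixed column order is what keeps entries comparable as the log grows.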

Now let’s examine more sophisticated mistakes that affect even experienced testers.

Advanced A/B Testing Mistakes and How to Avoid Them

Misinterpreting Data and Overvaluing Small Differences

As testing programs mature, the risks shift from obvious methodological errors to subtle misinterpretations that can misdirect optimization efforts.

Chasing “Wins” That Aren’t Statistically Significant

In the quest for continuous improvement, it’s tempting to implement any variation that shows positive results—even when those differences aren’t statistically significant.

A fashion retailer once implemented a new product filtering system based on a 0.5% conversion increase with only 80% confidence. Not only did the change require substantial development resources, but the “improvement” disappeared entirely with full implementation. The apparent difference had been statistical noise rather than a genuine performance improvement.

Similarly, overemphasizing small but statistically significant differences can divert resources from more impactful opportunities. A 0.2% conversion increase might be mathematically valid but practically meaningless compared to other potential improvements.

Best Practice: Use Statistical Significance Calculators

Maintain disciplined standards for implementation:

- Only implement changes that reach your predetermined confidence threshold (typically 95% or higher)

- Prioritize changes with meaningful business impact, not just statistical significance

- Consider the implementation cost relative to the expected benefit

- For close results, consider running follow-up tests with refined variations

Remember that statistical significance indicates reliability, not importance. A minor change with high confidence might be less valuable than pursuing a potentially larger improvement in a different area.
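You can combine both standards into a single decision rule. This sketch uses a pooled two-proportion z-test and a deliberately simple value model. It assumes revenue scales in proportion to conversion rate with average order value unchanged, and the revenue and cost figures in the example are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_sided_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Pooled two-proportion z-test; returns the two-sided p-value."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def should_implement(conv_control: int, n_control: int,
                     conv_variant: int, n_variant: int,
                     annual_revenue: float, implementation_cost: float,
                     alpha: float = 0.05) -> bool:
    """Implement only if the result clears the confidence threshold AND the
    projected gain (simplified: revenue x relative lift) exceeds the cost."""
    p_value = two_sided_p_value(conv_control, n_control, conv_variant, n_variant)
    lift = (conv_variant / n_variant) / (conv_control / n_control) - 1
    projected_gain = annual_revenue * lift
    return p_value < alpha and lift > 0 and projected_gain > implementation_cost


# A 0.5% relative lift (600 vs 603 conversions) is nowhere near significant.
print(should_implement(600, 20_000, 603, 20_000, 500_000, 1_000))
# A larger, significant lift still has to pay for itself.
print(should_implement(600, 20_000, 700, 20_000, 500_000, 10_000))
```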

Not Iterating or Building on Previous Tests

The most sophisticated testing programs build systematically on previous insights, yet many Shopify merchants treat each test as an isolated event.

Treating Testing as a One-Off Activity

Random, disconnected tests fail to leverage the compounding value of iterative optimization. Each test should inform future experiments, creating a progression of improvements rather than a series of unrelated changes.

I’ve observed stores that successfully tested headline improvements, then moved on to completely different elements without exploring further headline refinements. This abandons the opportunity to build on initial success with additional iterations that could multiply the initial improvement.

Best Practice: Continuous Optimization

Create a strategic testing roadmap that builds on previous insights:

- When a test produces a winner, consider running follow-up tests that refine that improvement

- Use insights from unsuccessful tests to inform new hypotheses

- Maintain a prioritized backlog of test ideas based on previous results

- Periodically revisit high-impact elements as your audience and offerings evolve

This iterative approach compounds your gains over time. A series of 5% improvements eventually delivers far greater results than a single 15% win.
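The compounding arithmetic is easy to check. This sketch assumes each win multiplies the conversion rate left by the previous one and that gains persist, which real tests do not guarantee, since effects can fade over time.

```python
from math import prod


def compounded_lift(lifts: list[float]) -> float:
    """Overall relative lift from successive wins, each applied to the previous rate."""
    return prod(1 + lift for lift in lifts) - 1


print(f"{compounded_lift([0.05] * 3):.2%}")  # 15.76%: three 5% wins already beat one 15% win
print(f"{compounded_lift([0.05] * 5):.2%}")  # 27.63%
```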

Foster a culture of ongoing experimentation within your team by celebrating both successful tests and the valuable learnings from unsuccessful ones. Testing should be a core business process, not a periodic project.

With these advanced pitfalls addressed, let’s turn to practical next steps for implementing effective testing on your Shopify store.

Practical Next Steps for Shopify Store Owners

Checklist: Setting Up a Reliable A/B Testing Program on Shopify

Whether you’re just starting with testing or refining an existing program, this checklist will help you establish a robust testing practice:

- Define clear goals and hypotheses for each test
  - Identify specific metrics you aim to improve (conversion rate, average order value, etc.)
  - Formulate explicit hypotheses using the “We believe that [change] will result in [outcome] because [rationale]” format
  - Prioritize tests based on potential impact and implementation effort

- Select appropriate Shopify A/B testing tools or apps
  - Evaluate options based on your store’s traffic volume and testing complexity
  - Ensure the tool integrates with your Shopify theme and any critical apps
  - Verify that the solution provides statistical significance calculations

- Test one element at a time and segment your audience
  - Isolate variables to generate clear, actionable insights
  - Segment results by key audience characteristics when sample size permits
  - Consider targeted tests for specific high-value segments

- Calculate required sample size and run tests for optimal duration
  - Use sample size calculators to determine minimum traffic requirements
  - Run tests for at least one full business cycle (typically 1-2 weeks)
  - Run until you reach your predetermined sample size, then check for statistical significance

- Document every test and analyze results rigorously
  - Maintain a comprehensive testing log with hypotheses, variations, and results
  - Analyze both quantitative metrics and qualitative insights
  - Share learnings with your team to build institutional knowledge

- Iterate based on learnings for continuous improvement
  - Build follow-up tests based on previous results
  - Refine successful variations with additional iterations
  - Use insights from unsuccessful tests to inform new hypotheses

Resources for Further Learning

Deepen your testing expertise with these valuable resources:

- Shopify’s official A/B testing guides and help center provide platform-specific best practices and implementation advice

- Explore the Shopify App Store for specialized testing tools, focusing on those with strong reviews and active support

- Industry blogs like Convertize, CXL, and GoodUI offer case studies and advanced testing strategies applicable to e-commerce

Remember that effective A/B testing is both a science and an art. The science lies in rigorous methodology and statistical validity. The art comes from developing insightful hypotheses based on customer understanding and creative problem-solving.

By avoiding the common mistakes outlined in this guide, you’ll build a testing program that consistently delivers reliable, actionable insights—transforming your Shopify store into a continuously improving engine of growth.


Ready to supercharge your Shopify store’s sales with perfectly optimized campaigns? Growth Suite helps you run effective on-site discount and email collection campaigns with a powerful AI-driven data engine. The app analyzes your products, collections, customers, and orders data to generate personalized, time-limited offers that maximize conversions. Install Growth Suite with a single click and start boosting your sales today—completely free during your 14-day trial!